The workplace assessment space can get crowded, at times making it difficult to remember which tools measure what. As an HR professional, you want to feel comfortable with every aspect of your hiring process. The credibility of your organization and employer brand are reflected in the candidate experience, which means the validity of any assessments you use for hiring is paramount.

Construct validity refers to how accurately a test or tool measures the theoretical concept – also known as “construct” – it’s designed to measure.

In other words: Is the test measuring what it’s supposed to? Does the test predict what it’s supposed to predict?

In this post, we’ll break down construct validity, including what constructs are, how to assess construct validity, why it’s vital to HR leaders and managers, and how to choose scientifically validated assessments for effective hiring decisions.

What are constructs?

In the research field, constructs are abstract concepts that are not directly observable or measurable. For example, you can’t measure someone’s talent or happiness with a ruler.

Some common examples of constructs include:

- Happiness

- Sadness

- Talent

- Intelligence

- Anxiety

- Optimism

- Motivation

- Self-esteem

- Conscientiousness

- Introversion

- Extroversion

- Resilience

- Empathy

- Critical thinking

- Cognitive ability

- Employee engagement

- Productivity

- Job satisfaction

- Customer satisfaction

Constructs are essential to HR practices since they can provide a framework for HR professionals to assess and manage talent. Without constructs, it would be impossible for an HR team to pinpoint things like organizational fit, communication skills, cognitive ability, and job performance.

By ensuring that the tools used to measure these constructs are valid, HR professionals can make more informed decisions when it comes to talent recruitment and optimization.

Types of validity related to construct validity

There are a few different kinds of evidence, or separate types of validity, that help to establish construct validity.

Convergent validity refers to how consistent a measure is with other measures of the same or similar constructs. If your measure is valid, it should be consistent with other testing tools designed to measure the same thing.

Discriminant validity refers to how weakly a measure relates to measures of different constructs. It verifies that your measure is clearly distinguishable from measures of separate, unrelated constructs.
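Convergent and discriminant evidence are usually summarized as correlations. Here is a minimal sketch in Python, assuming you have scores from the same group of people on three measures. All of the names and numbers are made up for illustration, and the grip strength measure simply stands in for an unrelated construct.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Hypothetical scores for the same eight people (illustrative numbers only).
new_cognitive_test = [12, 15, 19, 22, 25, 28, 31, 35]
established_cognitive_test = [40, 44, 52, 55, 61, 66, 70, 79]
grip_strength_kg = [41, 30, 38, 29, 44, 33, 40, 35]

# Convergent evidence: the new test should track an established test of the same construct.
convergent_r = pearson_r(new_cognitive_test, established_cognitive_test)

# Discriminant evidence: the new test should be nearly unrelated to a different construct.
discriminant_r = pearson_r(new_cognitive_test, grip_strength_kg)

print(f"convergent r = {convergent_r:.2f}, discriminant r = {discriminant_r:.2f}")
```

With this toy data, the first correlation comes out close to 1 and the second close to 0, which is the pattern you would hope to see. A real validation study would use far larger samples and report confidence intervals.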

Content validity evaluates whether the content of your measure effectively represents all aspects of the construct being assessed.

Criterion validity evaluates how effectively a measure predicts an outcome or “criterion.” It has two subtypes: predictive validity, which measures how well a test predicts an outcome in the future; and concurrent validity, which measures how well a test correlates with an outcome measured in the present moment.

How to assess construct validity

So how can HR leaders and managers apply this concept in the day-to-day business world? How can you accurately assess the construct validity of assessments designed to optimize talent recruitment and employee performance?

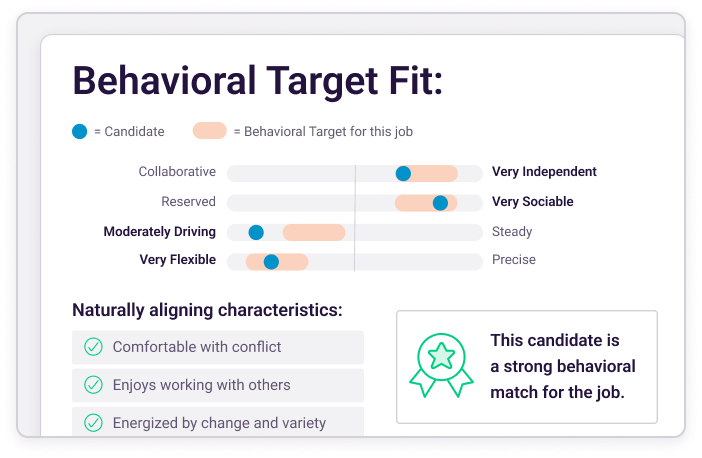

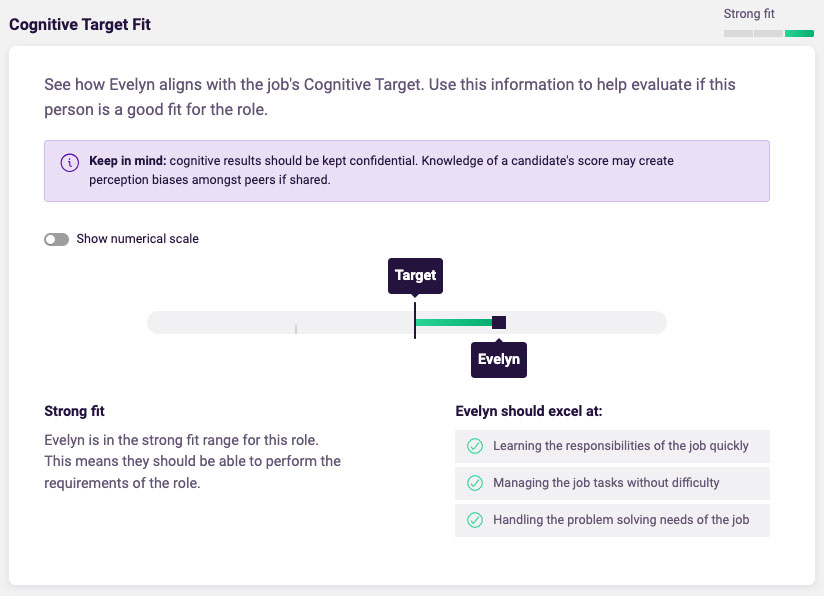

Let’s use the example of The Predictive Index Cognitive Assessment (CA). For a cognitive ability assessment like the CA, evidence can be gathered for five types of validity:

- To assess convergent validity, a cognitive ability assessment should show a strong correlation with other established cognitive ability assessments.

- To assess discriminant validity, a cognitive ability assessment should show little or no correlation with a different kind of assessment, such as a physical strength test.

- To assess content validity, a cognitive ability assessment should measure a range of cognitive ability categories, such as verbal, numerical, and abstract reasoning. This ensures the assessment provides an accurate and comprehensive measure of someone’s cognitive ability. If the assessment focuses on numerical reasoning (math skills) but neglects verbal reasoning (reading comprehension), it will have low content validity and therefore won’t be effective.

- To assess predictive validity (a subtype of criterion validity), you would ask employees to take the cognitive ability assessment, and then gather data on their job performance for a period of time afterward. If employees who scored well on the assessment go on to show consistently high job performance, and employees who scored poorly show consistently poor job performance, the test has strong predictive validity.

- To establish concurrent validity, you would compare employees’ test scores with their current performance data. If employees who score well on the cognitive ability assessment also tend to have consistently excellent job performance data, the assessment has strong concurrent validity.

In other words, criterion validity demonstrates the practicality of the cognitive ability assessment – how consistently it predicts and reflects the job performance of your employees.
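To make the criterion check concrete, here is a rough sketch that compares the later job performance of higher and lower scorers. It assumes you have each person's assessment score and a performance rating collected some months afterward. The records, the 1 to 5 rating scale, and the function name are all hypothetical. In practice, a correlation between scores and performance (a validity coefficient) is the more common summary, but the group comparison mirrors the description above.

```python
from statistics import mean, median

# Hypothetical records: (assessment score at hire, performance rating 12 months later).
records = [
    (14, 2.1), (18, 2.8), (21, 3.0), (24, 3.4),
    (27, 3.3), (30, 4.1), (33, 4.4), (36, 4.6),
]

def performance_gap(records):
    """Mean later performance of above-median scorers minus that of everyone else."""
    cutoff = median(score for score, _ in records)
    high = [perf for score, perf in records if score > cutoff]
    low = [perf for score, perf in records if score <= cutoff]
    if not high or not low:
        raise ValueError("need scorers on both sides of the median")
    return mean(high) - mean(low)

gap = performance_gap(records)
print(f"High scorers outperform low scorers by {gap:.2f} rating points on average")
```

In this made-up sample, the higher-scoring half averages about 1.3 rating points more than the lower-scoring half, which would count as evidence in favor of predictive validity. Swapping in current performance ratings instead of later ones turns the same check into a concurrent validity comparison.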

Why construct validity is important for HR leaders & managers

Construct validity is essential to HR leaders and managers who want to use personality, cognitive ability, and other job-related assessments that are accurate, relevant, and scientifically validated. It ensures the assessments you choose are founded on sound principles and rigorous testing.

If they’re not, then you might want to reconsider whether they’re appropriate for the workplace.

Without construct validity, you risk making hiring decisions that are based on inaccurate or irrelevant measurements, which could result in poor hires, reduced productivity, employee disengagement, and increased employee turnover.

Benefits of construct validity for HR professionals:

- Assists in identifying high-performing employees

- Supports fair compensation for high-performing employees

- Minimizes risk of bias or discrimination in hiring

- Strengthens any legal defense for HR decisions

- Provides reliable, data-driven insights into employee performance

- Helps improve employee retention rates

- Enhances employee engagement and incentivization

- Bolsters HR credibility through validated workplace assessments

Best practices for HR leaders & managers

According to The Standards for Educational and Psychological Testing, the foundations of any practical and effective employee assessment include fairness, reliability, and validity.

As an HR professional, when choosing an assessment, ask yourself the following questions.

Fairness:

- Does the assessment evaluate all members of the population in the same way, regardless of race, religion, sex, age, or sexual orientation?

- Is the assessment culturally sensitive and free from bias?

- Does the assessment present any risks of adverse impact?

- Are the assessment procedures standardized?

- Are the questions relevant to the job description?

- Is the assessment user-friendly and accessible for all members of the population?
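For the adverse impact question above, one widely used first screen is the four-fifths (80%) rule of thumb from the U.S. Uniform Guidelines on Employee Selection Procedures: if one group's selection rate is less than 80% of the highest group's rate, the result is flagged for a closer look. The sketch below is only an illustration of that arithmetic. The group labels, counts, and function names are hypothetical, and a flag is a prompt for further review, not a legal conclusion.

```python
def selection_rate(selected, applicants):
    """Share of applicants in a group who passed the assessment stage."""
    if applicants <= 0:
        raise ValueError("applicants must be positive")
    return selected / applicants

def impact_ratios(groups):
    """Map each group to its selection rate divided by the highest group's rate."""
    rates = {name: selection_rate(s, a) for name, (s, a) in groups.items()}
    top_rate = max(rates.values())
    if top_rate == 0:
        raise ValueError("no group had any selections")
    return {name: rate / top_rate for name, rate in rates.items()}

# Hypothetical counts: group -> (number selected, number of applicants).
groups = {"group_a": (48, 80), "group_b": (21, 50)}

ratios = impact_ratios(groups)

# Four-fifths rule of thumb: flag any group whose ratio falls below 0.8.
flagged = [name for name, ratio in ratios.items() if ratio < 0.8]
print(ratios, flagged)
```

Here group_b's selection rate (0.42) is 70% of group_a's (0.60), so group_b is flagged. With small applicant pools, the ratio can swing widely, so it is usually read alongside a statistical significance test.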

Reliability:

- Is the assessment precise enough for its intended purpose?

- If a current or prospective employee takes the test more than once, are their scores consistent?

Validity:

- Have third-party experts reviewed the assessment?

- Does the assessment measure what it’s supposed to?

- Does the assessment predict what it’s designed to?

- Is there substantial evidence supporting the connection between the assessment and job performance?

- Has the assessment been validated for specific open roles?

At The Predictive Index, our dedicated Science Team builds, monitors, and improves the defensibility of our assessments by conducting ongoing research and validity studies. Our assessments, which are also reviewed by third parties, are continuously examined for fairness, reliability, validity, and psychometric soundness.

Our timed PI Cognitive Assessment™ is a cognitive ability test for employment. It measures a person’s general mental ability and capacity for critical thinking. In only 12 minutes, you’ll have more information about a candidate’s likelihood of success and job performance than you would after a one-hour interview or standard aptitude test.